Future of robotics could include living skin for humanoid machines

By Kurt Knutsson, CyberGuy Report, Fox News

Researchers from the University of Tokyo have developed a groundbreaking method to cover robotic surfaces with genuine, living skin tissue. The idea of robots with skin isn’t just about creating a more lifelike appearance. This innovation opens up a world of possibilities, from more realistic prosthetics to robots that can seamlessly blend into human spaces.

As we delve into the details of this research, we’ll uncover how these scientists are bridging the gap between artificial and biological systems, potentially revolutionizing fields ranging from health care to human-robot interaction.

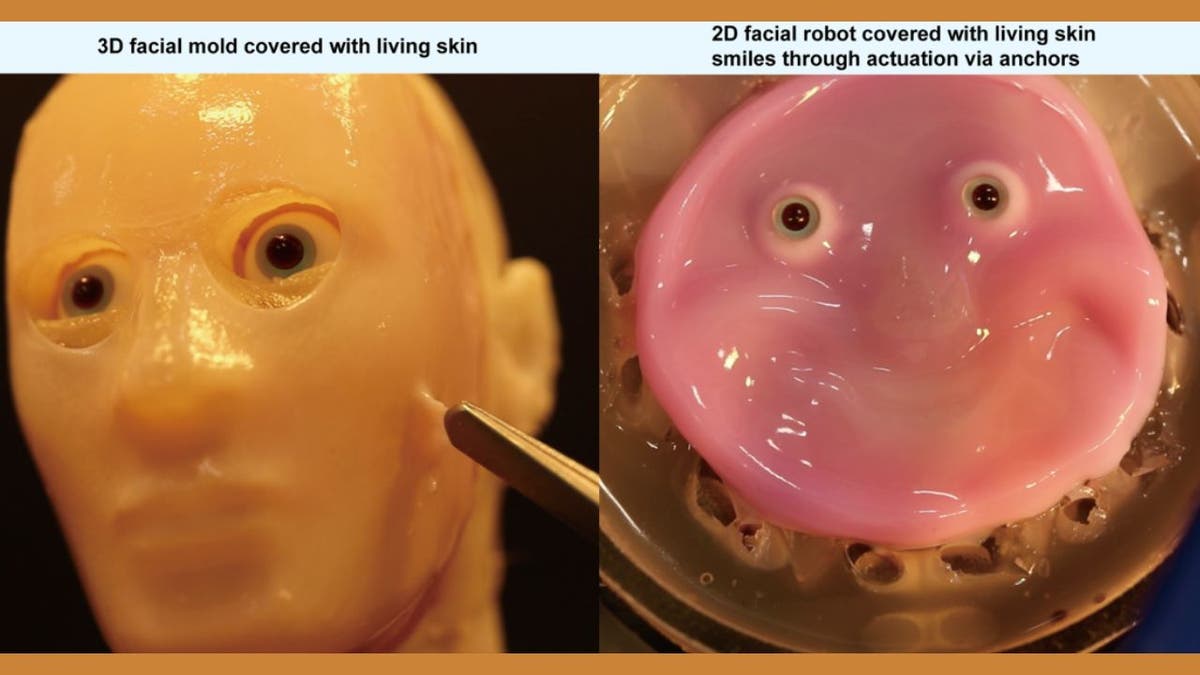

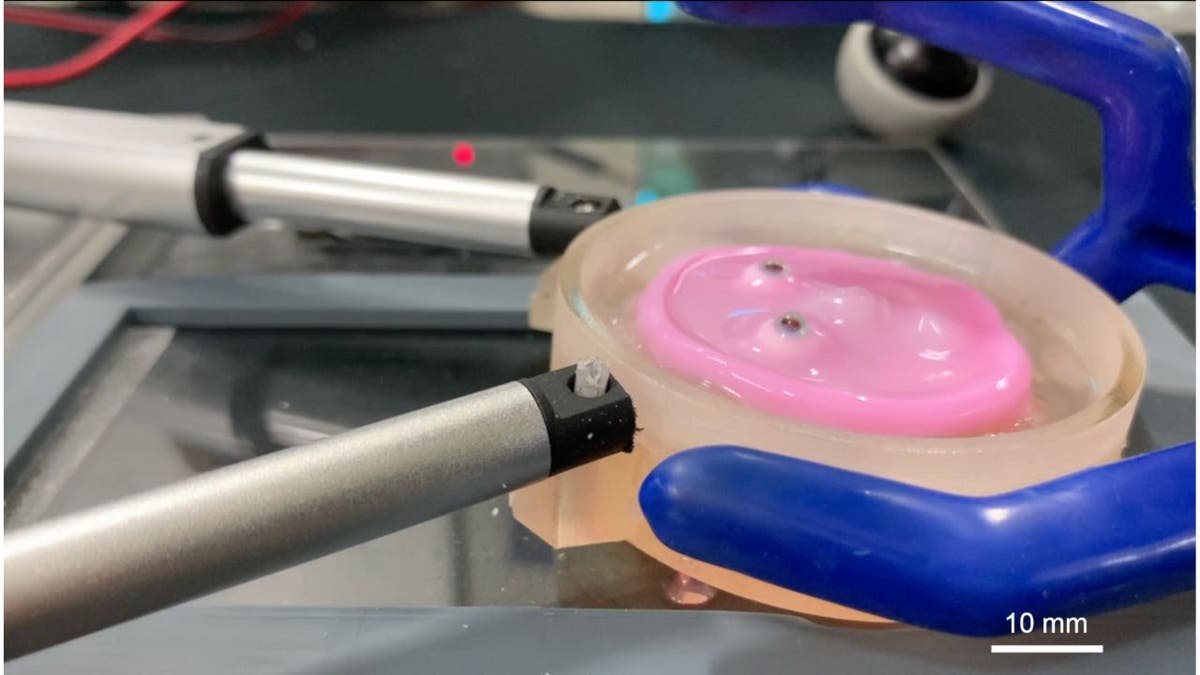

Engineered skin tissue (Shoji Takeuchi’s research group at the University of Tokyo) (Kurt “CyberGuy” Knutsson)

What’s the big deal?

We’re talking about robots that not only look human-like but could one day have skin that heals, sweats and even tans. This isn’t just about aesthetics; it’s about creating robots that can interact more naturally with humans and their environment.

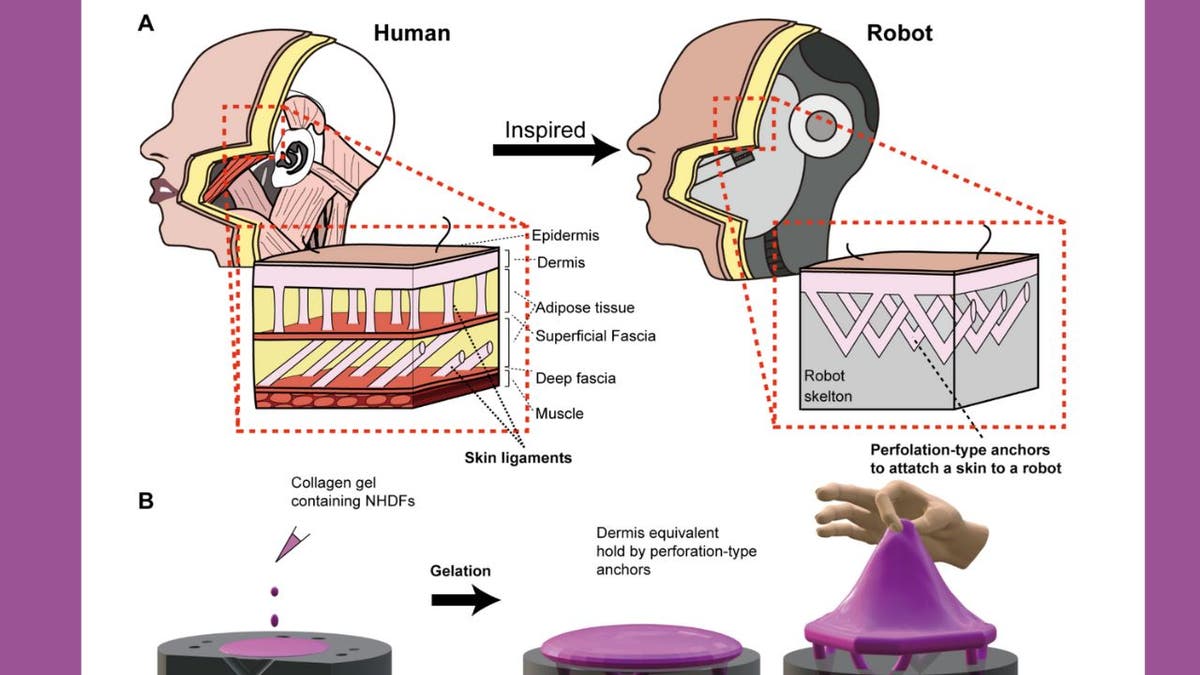

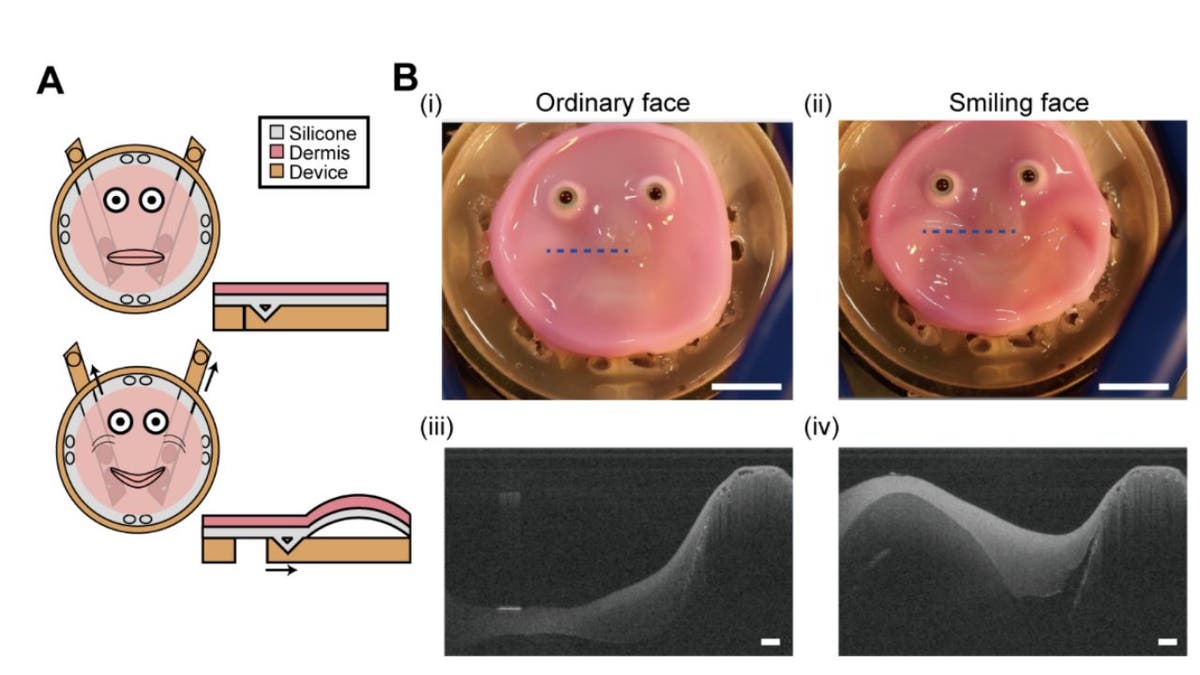

Illustration of the tissue-fixation method (Shoji Takeuchi’s research group at the University of Tokyo) (Kurt “CyberGuy” Knutsson)

How does it work?

The secret lies in something called “perforation-type anchors.” These clever little structures are inspired by the way our own skin attaches to the tissues underneath. Essentially, they allow living tissue to grow into and around the robot’s surface, creating a secure bond.

The researchers used a combination of human dermal fibroblasts and human epidermal keratinocytes to create this living skin. They cultured these cells in a carefully prepared mixture of collagen and growth media, allowing the tissue to mature and form a structure similar to human skin.

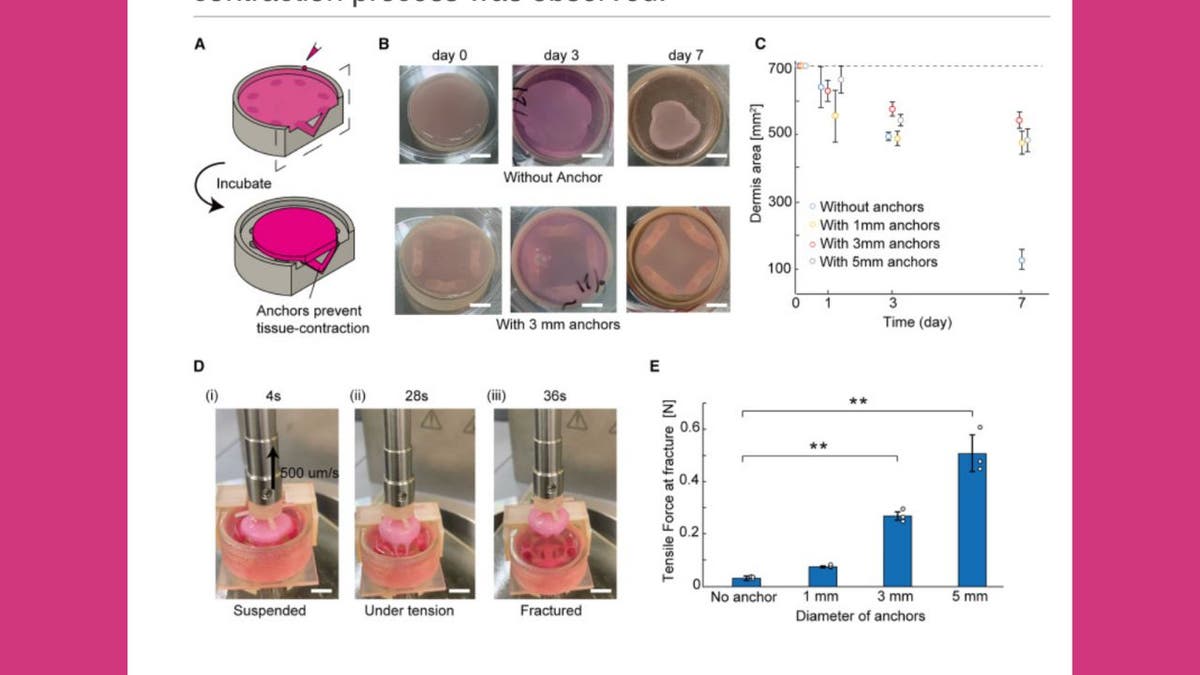

Evaluation of the perforation-type anchors to hold tissue (Shoji Takeuchi’s research group at the University of Tokyo) (Kurt “CyberGuy” Knutsson)

The minds behind the innovation

The research was conducted at the Biohybrid Systems Laboratory at the University of Tokyo, led by Professor Shoji Takeuchi. The team’s work is pushing the boundaries of what’s possible in robotics and bioengineering.

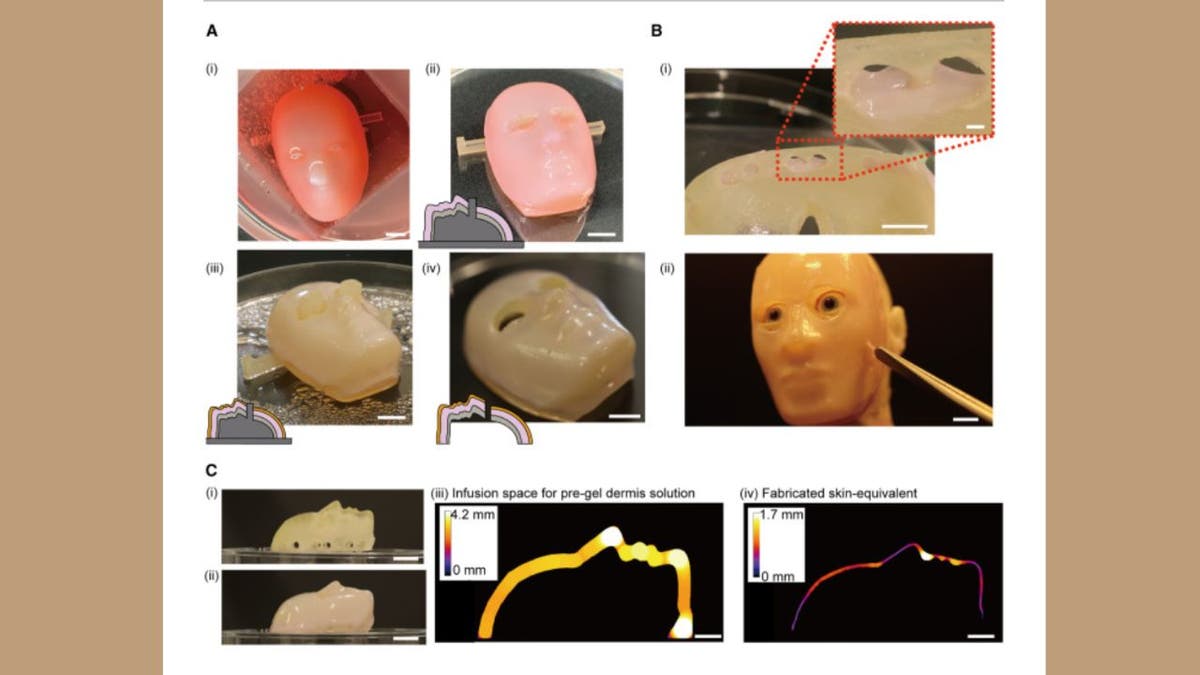

Demonstration of the perforation-type anchors to cover the facial device (Shoji Takeuchi’s research group at the University of Tokyo) (Kurt “CyberGuy” Knutsson)

Building a face that can smile

One of the coolest demonstrations of this technology is a robotic face covered with living tissue that can actually smile. The researchers created a system where the skin-covered surface can be moved to mimic facial expressions.

To achieve this, they designed a robotic face with multiple parts, including a base with perforation-type anchors for both a silicone layer and the dermis equivalent. This silicone layer mimics subcutaneous tissue, contributing to a more realistic smiling expression.

The smiling robotic face (Shoji Takeuchi’s research group at the University of Tokyo) (Kurt “CyberGuy” Knutsson)

Challenges and solutions

Getting living tissue to stick to a robot isn’t as easy as it sounds. The team had to overcome issues like making sure the tissue could grow into the anchor points properly. They even used plasma treatment to make the surface more “tissue-friendly.”

The researchers also had to consider the size and arrangement of the anchors. Through finite element method simulations, they found that larger anchors provided more tensile strength, but they also took up more of the robot’s surface, so anchor size had to be balanced against the area left for the tissue.

Engineered skin tissue (Shoji Takeuchi’s research group at the University of Tokyo) (Kurt “CyberGuy” Knutsson)

Why this matters

This technology could be a game-changer for fields like prosthetics and humanoid robotics. Imagine prosthetic limbs covered in skin that looks and feels real, or robots that can interact with humans in more natural ways.

The ability to create skin that can move to form facial expressions opens up new possibilities for human-robot interaction. It could lead to more empathetic and relatable robotic assistants in various fields, from health care to customer service.

The smiling robotic face (Shoji Takeuchi’s research group at the University of Tokyo) (Kurt “CyberGuy” Knutsson)

While we’re still a long way from seeing robots with fully functional living skin walking among us, this research from the University of Tokyo opens up exciting possibilities. It’s a step towards creating robots that blur the line between machines and living organisms.

As we continue to advance in this field, we’ll need to grapple with the technical challenges and ethical implications of creating increasingly lifelike machines. Future research might focus on improving the durability of living skin, enhancing its ability to heal or even incorporating sensory capabilities. One thing’s for sure: The future of robotics is looking more human than ever.